The Rising Costs of HBM4: Implications for AI Graphics Cards

Summary:

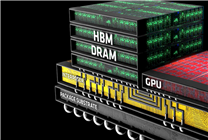

- The performance of AI graphics cards is significantly influenced by both GPU and HBM chip technology.

- Upcoming HBM4 chips are expected to drive costs sharply higher, with significant implications for data centers.

- Power consumption and heating issues related to advanced HBM technologies pose additional challenges.

On September 27, industry sources highlighted a notable trend in AI graphics cards: HBM (High Bandwidth Memory) chips now play a pivotal role alongside GPUs in determining performance. With a new generation of GPUs paired with HBM4 approaching launch, industry experts predict a dramatic increase in prices, raising important questions for tech enthusiasts and businesses alike.

HBM technology first entered the market approximately a decade ago with the AMD Fury series graphics cards. It launched as a high-performance solution for consumer graphics, but its prohibitive cost limited widespread adoption. In recent years it has gained traction in data centers and has evolved through four generations, with HBM3, HBM3e, and the imminent HBM4 delivering the latest gains in performance and capacity.

The advancements in HBM technology are evident. Each generation has not only vastly improved performance but also significantly increased costs. For instance, the price per 1Gb of HBM has risen with each generation: HBM2/2e was priced around $8, HBM3 at $10, and HBM3e at $12. These figures come from a market analysis that is now two years old. Projections for 2025 put HBM3 at approximately $8.1 and HBM3e at $13.6, while HBM4, which is set for mass production this year, is expected to reach around $16.

The cost implications are substantial. Because 1GB equals 8Gb, 1GB of HBM4 capacity at $16 per Gb comes to about $128 (approximately 1,000 yuan), highlighting the financial burden on manufacturers. Although estimates suggest that the price of 1Gb of HBM4 could fall to around $15.1 next year, the overall investment remains high. AI graphics cards in the HBM4 era commonly require capacities exceeding 300GB; AMD’s MI450 series, for example, reaches 432GB, which pushes the cost of the HBM chips alone above $50,000 (432GB × $128 is roughly $55,000), surpassing the price of standard GPU chips.

Furthermore, the anticipated HBM4e is expected to reach capacities of up to 1TB, with the cost of the memory potentially exceeding $100,000. An investment of this size is likely to strain data center budgets and push the limits of affordability.
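The cost figures above follow from simple arithmetic on the per-Gb prices. The sketch below reproduces them as a rough check. The function name `hbm_cost_usd` is illustrative, 1TB is assumed to mean 1,024GB, and the HBM4 price of $16 per Gb is used as a stand-in for HBM4e, whose per-Gb price the sources do not give.

```python
GBIT_PER_GBYTE = 8  # 1 GB of capacity is 8 Gb


def hbm_cost_usd(capacity_gb: float, price_per_gbit_usd: float) -> float:
    """Estimate memory-chip cost from capacity (GB) and a per-Gb price (USD)."""
    return capacity_gb * GBIT_PER_GBYTE * price_per_gbit_usd


# Capacities and prices quoted in the article. The 1 TB row is an assumption:
# it reuses the HBM4 price because no HBM4e price is given.
scenarios = [
    ("1 GB of HBM4 at $16/Gb", 1, 16.0),
    ("432 GB (MI450 series) at $16/Gb", 432, 16.0),
    ("432 GB at next year's projected $15.1/Gb", 432, 15.1),
    ("1 TB at $16/Gb (HBM4 price as stand-in for HBM4e)", 1024, 16.0),
]

for label, capacity_gb, price in scenarios:
    print(f"{label}: ${hbm_cost_usd(capacity_gb, price):,.0f}")
```

Under these assumptions, a 432GB card comes to about $55,300 in HBM at $16 per Gb and about $52,200 at $15.1 per Gb, while 1TB comes to about $131,000. These results are consistent with the "above $50,000" and "exceeding $100,000" figures, though real contract prices will differ.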

While financial considerations are paramount, technical challenges also accompany these advancements. HBM technology, with its highly integrated design, faces critical issues related to power consumption and heat generation. As capacity increases, so does the complexity of the chip structure, leading to higher power demands that could surpass even those of the GPU itself. This escalation in power consumption not only affects performance but also raises the operating costs of data center infrastructure.

In conclusion, as the landscape of AI graphics card technology evolves, the implications of rising costs associated with HBM4 and its successors will resonate throughout the industry. Manufacturers and consumers alike must prepare for the shifts this technology will bring, not only in terms of economic impact but also in navigating the engineering challenges that lie ahead. As we advance, careful consideration and strategic planning will be essential to leverage the full potential of these transformative technologies.