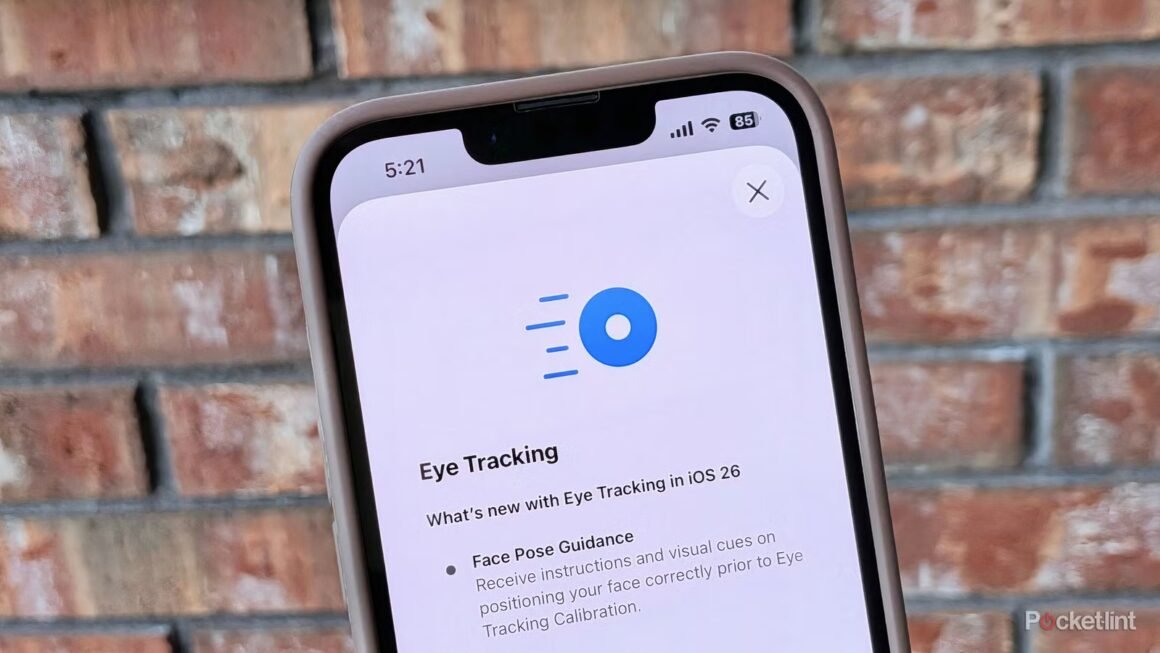

Apple has brought Eye Tracking to the iPhone 12 and newer models running iOS 18 or later. The feature lets users control their device hands-free using eye movements, a notable addition for individuals with motor disabilities.

This technology could be particularly significant for those looking for new ways to interact with their devices. As touchscreens have matured, expectations for alternative interaction methods have grown. The availability of Eye Tracking on recent iPhone models expands accessibility options, especially for users who struggle with traditional input methods. However, the feature is still designed primarily as an accessibility tool rather than a standard navigation option.

In the current market, Eye Tracking stands out among smartphone capabilities, but it isn’t a mainstream input method. Alternatives exist, such as voice assistants like Google Assistant and stylus tools like Samsung’s S Pen, which cater to different user needs. Specialized assistive devices, such as accessibility switches, may suit some users better than Eye Tracking, which can involve a learning curve. Prices for these alternatives vary significantly, from budget options around $50 to premium devices priced over $100, so it is important to weigh individual needs and preferences.

Ultimately, Eye Tracking on the iPhone is a promising technology, especially for users who need hands-free accessibility options. However, it may not suit everyone, particularly those who prefer the traditional touch interface or who rely on more established input methods. If you prioritize intuitive, immediate interaction over exploring new technologies, you might find a stylus or voice command system a more reliable choice.

Source:

www.pocket-lint.com